Simply put, containers are a technology that allows applications to be written once and run anywhere.

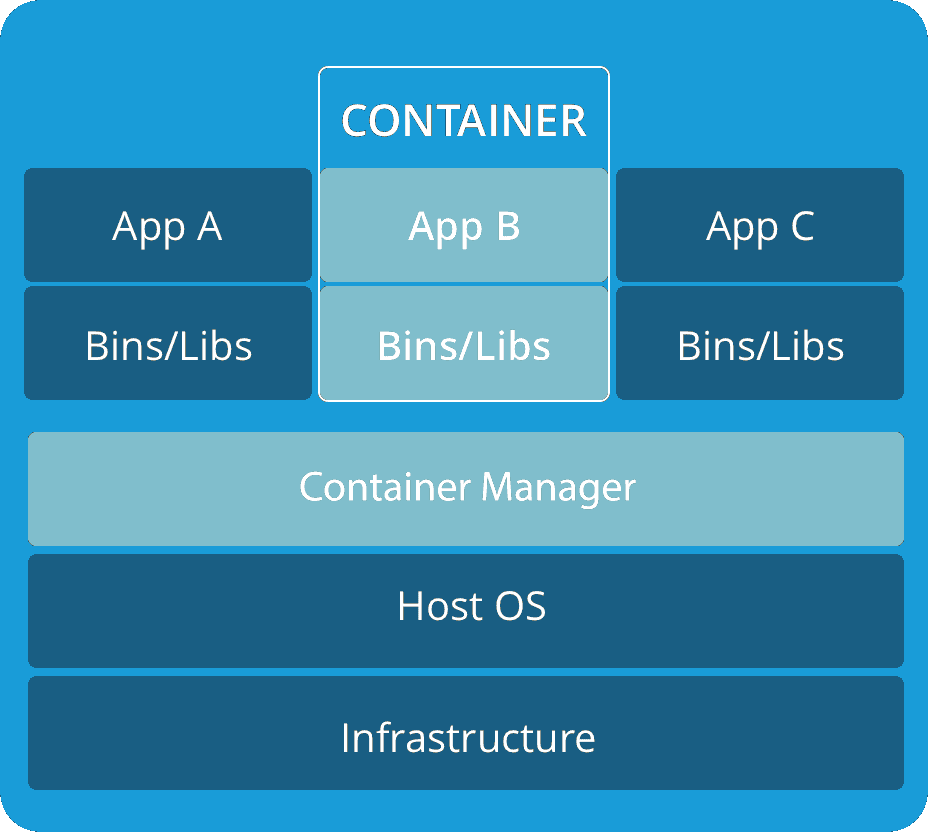

Containers are lightweight software units that bundle an application, its dependencies, and its configuration into a single image. They run in isolated user-space environments on a conventional operating system, whether on a physical server or in a virtualized environment. They do this by taking advantage of a form of operating system (OS) virtualization, in which features of the OS (in the case of the Linux kernel, the namespaces and cgroups primitives) are used both to isolate processes and to control the amount of CPU, memory, and disk those processes can access. Containers first appeared decades ago in forms such as FreeBSD Jails and AIX Workload Partitions, but most developers regard 2013, when Docker was introduced, as the start of the modern container era.
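On a Linux host, both kernel primitives mentioned above can be observed from any process through the `/proc` filesystem. The following is a minimal Python sketch, assuming a Linux system with `/proc` mounted. The function names `list_namespaces` and `parse_cgroup_membership` are illustrative and do not come from any library.

```python
import os


def list_namespaces(ns_dir="/proc/self/ns"):
    """Map each namespace type (e.g. 'pid', 'net') to its kernel identifier.

    Every entry in ns_dir is a symbolic link whose target looks like
    'pid:[4026531836]'. Processes whose links point to the same
    identifier share that namespace.
    """
    return {name: os.readlink(os.path.join(ns_dir, name))
            for name in sorted(os.listdir(ns_dir))}


def parse_cgroup_membership(text):
    """Parse the contents of /proc/<pid>/cgroup.

    Each line has the form 'hierarchy-id:controllers:path'. On a pure
    cgroup v2 system there is a single line such as '0::/user.slice'.
    """
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append({
            "hierarchy": int(hierarchy_id),
            "controllers": controllers.split(",") if controllers else [],
            "path": path,
        })
    return entries


if __name__ == "__main__":
    # Assumes Linux; on other systems /proc/self/ns does not exist.
    if os.path.isdir("/proc/self/ns"):
        for name, ident in list_namespaces().items():
            print(f"{name:20} {ident}")
        with open("/proc/self/cgroup") as fh:
            for entry in parse_cgroup_membership(fh.read()):
                print(entry)
    else:
        print("No /proc/self/ns here; this sketch needs a Linux host.")
```

Running the script on the host and again inside a container should show different namespace identifiers and a different cgroup path, which is the isolation and resource grouping described above made visible.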

Containers are often described as “lightweight” because they share the host machine’s operating system kernel and avoid the overhead of bundling a full operating system with each application. A container is typically much smaller than a VM and starts faster, so far more containers can run on the compute capacity that a single VM would need. This drives higher server efficiency and, in turn, can reduce server and licensing costs.

Why use it?

Let's discuss some of the benefits:

- Portability between different platforms and clouds: it’s truly written once, run anywhere.

- Agility that allows developers to integrate with their DevOps environment.

- Improved security by isolating applications from the host system and from each other.

- Flexibility to work on virtualized infrastructures or bare metal servers.

- Easier management, since install, upgrade, and rollback processes are built into platforms such as Kubernetes.

- Efficiency, through using far fewer resources than virtual machines and delivering higher utilization of compute capacity.

Containerization also addresses a common portability problem. With traditional methods, code developed in one computing environment often produces bugs and errors when it is moved to another, for example when a developer transfers code from a desktop computer to a virtual machine (VM) or from a Linux to a Windows operating system. Containerization largely eliminates this problem by bundling the application code together with the configuration files, libraries, and dependencies it needs to run. This single package of software, or “container,” is abstracted away from the host operating system, so it stands alone and becomes portable: able to run on any platform or cloud that provides a compatible container runtime.

The history of containers

The container concept was imagined as early as the 1970s.

1979: Unix V7

During the development of Unix V7 in 1979, the chroot system call was introduced, changing the root directory of a process and its children to a new location in the filesystem.

This advance was the beginning of process isolation: segregating file access for each process. Chroot was added to BSD in 1982.
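The effect of chroot can be sketched in a few lines of Python using `os.chroot`, which wraps the system call. This is an illustration only, assuming a Unix-like system and a process that is allowed to call chroot (normally root). The helper name `list_root_after_chroot` is hypothetical.

```python
import json
import os
import tempfile


def list_root_after_chroot(new_root):
    """Fork a child, chroot it into new_root, and return what it sees at "/".

    The chroot happens only in the child, so the parent keeps its
    original view of the filesystem.
    """
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:  # child process
        try:
            os.close(read_fd)
            try:
                os.chroot(new_root)  # requires root (CAP_SYS_CHROOT on Linux)
                os.chdir("/")        # step inside the new root
                payload = {"entries": sorted(os.listdir("/"))}
            except OSError as exc:
                payload = {"errno": exc.errno}
            os.write(write_fd, json.dumps(payload).encode())
        finally:
            os._exit(0)  # never fall through into the parent's code
    # Parent process: read the child's report and reap it.
    os.close(write_fd)
    with os.fdopen(read_fd) as pipe:
        payload = json.loads(pipe.read())
    os.waitpid(pid, 0)
    if "errno" in payload:
        raise OSError(payload["errno"], os.strerror(payload["errno"]))
    return payload["entries"]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as jail:
        open(os.path.join(jail, "hello.txt"), "w").close()
        try:
            print(list_root_after_chroot(jail))
        except OSError as exc:
            print(f"chroot not permitted here: {exc}")
```

When run with sufficient privileges, the child sees only `hello.txt` at `/`, because everything outside the new root is unreachable by path. Note that chroot restricts file access only; it does not isolate processes, networking, or resource usage, which is why later mechanisms were needed.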

2000: FreeBSD Jails

FreeBSD Jails allows administrators to partition a FreeBSD computer system into several independent, smaller systems, called “jails,” each of which can be assigned its own IP address and configuration.

2001: Linux VServer

Like FreeBSD Jails, Linux VServer is a jail mechanism that can partition resources (file systems, network addresses, memory) on a computer system.

2004: Solaris Containers

In 2004, the first public beta of Solaris Containers was released. It combines system resource controls with the boundary separation provided by zones.

2005: OpenVZ (Open Virtuozzo)

This is an operating system-level virtualization technology for Linux that uses a patched Linux kernel for virtualization, isolation, resource management and checkpointing.

2006: Process Containers

Process Containers, launched by Google in 2006, was designed for limiting, accounting for, and isolating the resource usage (CPU, memory, disk I/O, network) of a collection of processes.
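Process Containers was later renamed control groups (cgroups) and merged into the Linux kernel. In the current cgroup v2 interface, each group's limits are exposed as plain text files, so the limiting described above can be inspected with ordinary file reads. The sketch below assumes a cgroup v2 host; the function names are illustrative, and the directory passed to `read_limits` depends on how a given system lays out its cgroups.

```python
import os


def parse_memory_max(text):
    """Return the memory limit in bytes, or None when the file says 'max'."""
    value = text.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(text):
    """Return the CPU limit as a fraction of one CPU, or None if unlimited.

    cpu.max holds '<quota> <period>' in microseconds, e.g. '50000 100000'
    means the group may use 50 ms of CPU time in every 100 ms window.
    """
    quota, period = text.split()
    return None if quota == "max" else int(quota) / int(period)


def read_limits(cgroup_dir):
    """Read both limits from a cgroup v2 directory, skipping missing files."""
    parsers = {"memory.max": parse_memory_max, "cpu.max": parse_cpu_max}
    limits = {}
    for filename, parse in parsers.items():
        path = os.path.join(cgroup_dir, filename)
        if os.path.exists(path):
            with open(path) as fh:
                limits[filename] = parse(fh.read())
    return limits


if __name__ == "__main__":
    # Assumed mount point; the root cgroup usually has no limit files,
    # so an empty dict here is normal. Point it at a child group instead.
    print(read_limits("/sys/fs/cgroup"))
```

A container runtime sets these same files when a container is started with CPU or memory limits, which is how the kernel knows how much of each resource the container's processes may consume.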

2008: LXC

LXC (Linux Containers) was the first and, at the time, most complete implementation of a Linux container manager.

2011: Warden

Warden can isolate environments on any operating system, running as a daemon and providing an API for container management.

2013: LMCTFY

Let Me Contain That For You (LMCTFY) kicked off in 2013 as an open-source version of Google's container stack, providing Linux application containers.

2013: Docker

Docker marks the point at which containers exploded in popularity. We will dive deeper into it in upcoming articles.

Containers vs. Virtual machines

The big question that comes up when we talk about containers is: what are the differences between containers and virtual machines?

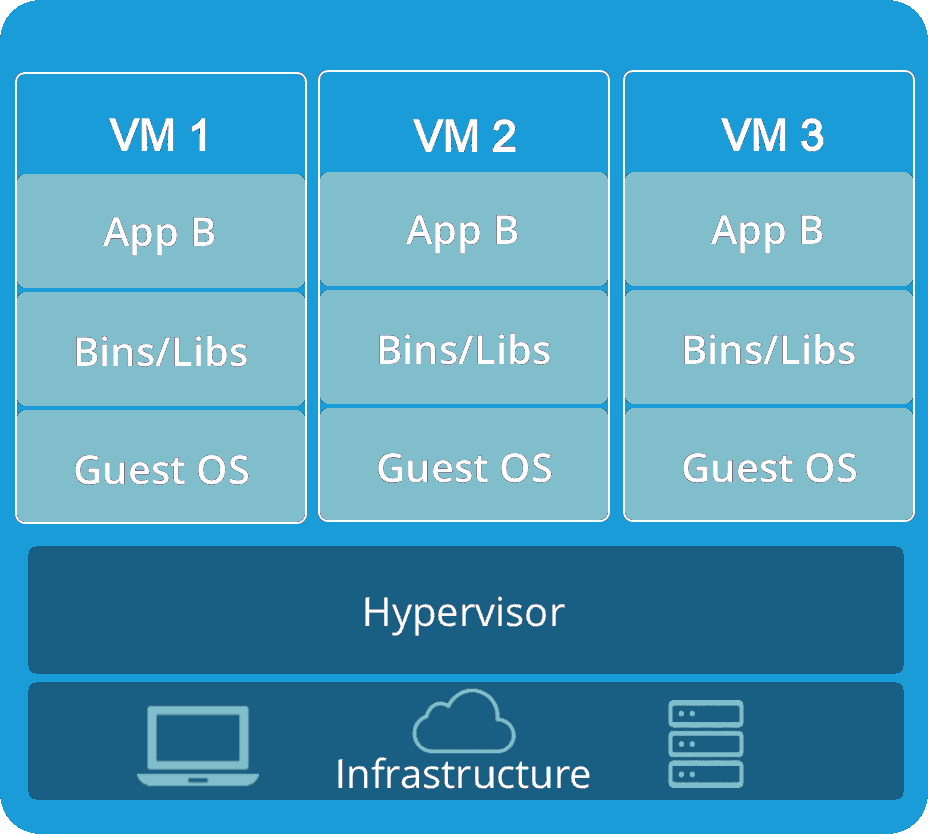

Virtual machine (VM)

A VM is an emulation of a physical computer that enables teams to run what appear to be multiple machines, with multiple operating systems, on a single computer. VMs interact with the physical hardware through lightweight software layers called hypervisors. Hypervisors separate VMs from one another and allocate processors, memory, and storage among them.

Containers

Rather than spinning up an entire virtual machine, containerization packages together everything needed to run a single application or microservice, including the runtime libraries it needs. The container includes the code, its dependencies, and the operating system's user-space libraries and tools, but not a kernel of its own; it shares the kernel of the host. This enables applications to run almost anywhere: a desktop computer, a traditional IT infrastructure, or the cloud.

| | Container | Virtual machine (VM) |
| --- | --- | --- |
| Boot time | Boots in a few seconds. | Can take a few minutes to boot. |
| Runs on | Uses a container engine (runtime) on the host OS. | Uses a hypervisor. |
| Memory efficiency | Needs less memory because no guest OS is virtualized. | Less efficient because an entire guest OS must be loaded before the application starts. |
| Isolation | Weaker isolation: containers share the host kernel, so a kernel-level problem can affect all of them. | Stronger isolation: each VM runs its own OS, so the chance of interference is minimal. |
| Deployment | Easy to deploy: a single container image can be used across platforms. | Comparatively lengthy, because each VM is a separate instance that must be provisioned. |
| Performance | Near-native performance, since there is no guest OS or hardware virtualization layer. | Some overhead from the hypervisor and the guest OS. |

Conclusion

Containerization is a major trend in software development, and its adoption will likely grow in both magnitude and speed.

Large players like Google and IBM are making big bets on containerization. Additionally, an enormous startup ecosystem is forming to enable containerization.

Containerization’s proponents believe that it allows developers to create and deploy applications faster and more securely than traditional methods. While containerization is expensive, its costs are expected to fall significantly as containerization environments develop and mature. Containerization is thus likely to become the new norm for software development.
